Digital Support, Unimpeded Communication: The Development, Support and Promotion of AI-assisted Communication Assistive Devices for Speech Impairment

A voice with warmth deserves to be heard by the world. A dialogue with wisdom brings services closer to the heart.

Funding Agency

National Science and Technology Council (NSTC)

Project Duration

May 1, 2023 - April 30, 2026

Grant Number

NSTC 113-2425-H-305-003-

Host Institution

National Taipei University

Project Overview

Mission Statement

This NSTC-funded three-year project (2023–2026) aims to establish AI-based inclusive communication assistive systems for speech-impaired individuals, integrating four sub-projects covering hardware, AI model development, multimodal dialogue systems, and field testing.

Sub-project 1

Development of embedded hardware and speech signal assistive modules.

Sub-project 2

Design of adaptive AI communication models and user interfaces.

Sub-project 3

Construction of multimodal cross-lingual task-oriented dialogue systems for inclusive communication.

Sub-project 4

Field testing, user validation, and technology dissemination.

Foundation & Design

Needs assessment, prototype design, and integration framework setup.

System Development

AI model training, dialogue integration, and multimodal enhancement.

Testing & Application

Field testing, performance optimization, and cross-sector application.

Sub-project 3

Multimodal Cross-lingual Task-Oriented Dialogue System for Inclusive Communication Support

Research Assistant Responsibilities

Designing and implementing a RAG-based multimodal dialogue system integrating text, speech, and image recognition for accessible, multilingual, and adaptive communication.

Research Objectives

End-to-End Multimodal System

Develop a comprehensive dialogue system capable of understanding text, speech, and images simultaneously.

Cross-lingual Communication

Enable seamless interaction across Chinese, English, Taiwanese, and Vietnamese.

Social Welfare Integration

Deploy AI systems in partnership with organizations to provide accessible communication support.

Research Methodology

Needs Assessment & Data Collection

Conducted in-depth interviews with 6 social welfare organizations to identify real-world requirements and collected diverse data sources including FAQs and conversation logs.

AI-Enhanced Knowledge Base Construction

Utilized GPT-4o to generate comprehensive Q&A datasets and built multilingual knowledge bases optimized for specific organizational needs.
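
As an illustration of what one knowledge-base record might look like, the sketch below stores generated Q&A pairs as JSON Lines and drops repeated questions. The field names, the `QAEntry` class, and the `to_jsonl` helper are assumptions made for this example; the project's actual schema is not described here.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class QAEntry:
    """One generated Q&A pair (hypothetical schema, not the project's)."""
    organization: str
    language: str
    question: str
    answer: str
    source: str  # e.g. "faq" or "conversation_log"


def to_jsonl(entries):
    """Serialize entries to JSON Lines, skipping repeated questions."""
    seen = set()
    lines = []
    for entry in entries:
        # Two entries count as duplicates when organization, language,
        # and the normalized question text all match.
        key = (entry.organization, entry.language, entry.question.strip().lower())
        if key in seen:
            continue
        seen.add(key)
        # ensure_ascii=False keeps Chinese and Vietnamese text readable.
        lines.append(json.dumps(asdict(entry), ensure_ascii=False))
    return "\n".join(lines)
```

Keeping the organization and language on every record would let one file feed several per-organization, per-language indexes, which is one plausible way to serve multiple partners from a shared pipeline.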

Iterative Development & Deployment

Followed a three-stage development process: core validation with a LINE Bot, feature expansion with voice integration, and interface consolidation on a Gradio web platform.

Objectives & Key Features

- Develop inclusive AI dialogue systems for multimodal and multilingual communication.

- Integrate NLP, speech, and vision-based components to enhance accessibility.

- Apply RAG-enhanced retrieval for precise context-based responses.

- Design adaptive and user-friendly interfaces for assistive contexts.

- Conduct field deployment with NPO partners and assistive centers.

AI Intelligence

LLM + RAG-based comprehension and reasoning.

Multimodal Input

Text, speech, and image integrated communication.

Cross-lingual Dialogue

Supports Chinese, English, Taiwanese, and Vietnamese.

Task Orientation

Subsidy inquiry, assistive equipment info, and more.

Accessibility Design

Interface optimized for speech-impaired users.

Technical Approach

System Architecture

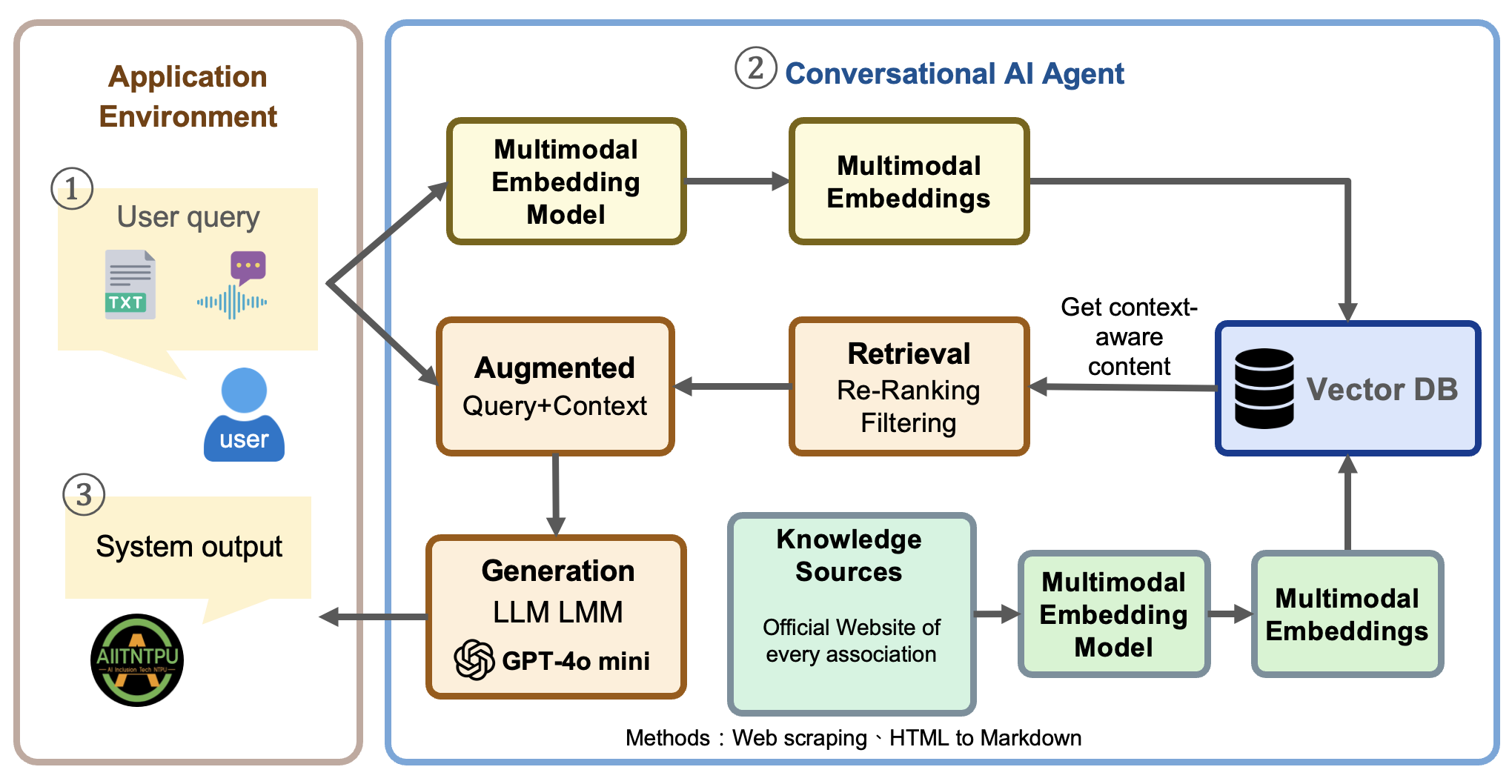

Core RAG-Enhanced Architecture

The system uses a Retrieval-Augmented Generation (RAG) framework: e5-base embeddings indexed with FAISS retrieve relevant knowledge-base entries, GPT-4o or Mistral generates the response, and a multilingual TTS stage produces speech output.

Figure: Subproject III System Architecture - Multimodal RAG Framework

Technical Implementation Stack

Embedding & Retrieval

- e5-base for multilingual text embeddings

- FAISS for efficient similarity search

- Custom vector database optimization
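
The retrieval step listed above can be sketched with the standard library alone. This is a minimal illustration, not the project's code: `embed` is a toy bag-of-words stand-in for e5-base, and `TinyIndex` is a brute-force stand-in for a FAISS index. Both names are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an e5-base embedding: lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyIndex:
    """Brute-force stand-in for a FAISS index (hypothetical helper)."""

    def __init__(self):
        self.passages = []
        self.vectors = []

    def add(self, passage: str) -> None:
        self.passages.append(passage)
        self.vectors.append(embed(passage))

    def search(self, query: str, k: int = 2):
        """Return up to k (score, passage) pairs, best match first."""
        q = embed(query)
        scored = [(cosine(q, v), p) for v, p in zip(self.vectors, self.passages)]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


index = TinyIndex()
for passage in [
    "Assistive device subsidy applications are filed at the district office.",
    "The center lends wheelchairs for short term use.",
    "Speech therapy sessions run every Tuesday.",
]:
    index.add(passage)

top_score, top_passage = index.search("How do I apply for an assistive device subsidy")[0]
print(top_passage)
```

In a real deployment the word counts would be replaced by dense vectors from the embedding model and the linear scan by a FAISS index. Note that the E5 model cards ask for `query: ` and `passage: ` prefixes on inputs, which this toy version omits.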

Generation & Reasoning

- GPT-4o-mini & Mistral for response generation

- Custom prompt engineering for domain-specific tasks

- Context-aware multilingual processing
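
To make the prompt-engineering step concrete, here is a minimal sketch of how retrieved passages could be folded into a prompt for the generation model. The wording of the instructions and the `build_prompt` helper are assumptions for illustration; the project's actual prompts are not shown in this document.

```python
def build_prompt(question: str, passages: list, reply_language: str = "English") -> str:
    """Assemble a grounded prompt from a question and retrieved passages."""
    # Number each passage so the model (and a human reviewer) can refer to it.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "You are an assistant for a social welfare organization.\n"
        f"Answer in {reply_language} using only the context below.\n"
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
    )


prompt = build_prompt(
    "Where do I apply for the subsidy?",
    ["Subsidy applications are filed at the district office."],
    reply_language="Vietnamese",
)
print(prompt)
```

Passing the reply language explicitly is one simple way to keep the answer in the user's language even when the retrieved passages are written in another.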

Multimodal Processing

- Whisper for speech recognition

- Vision models for image understanding

- TTS with Meta MMS-TTS-ZAN for Taiwanese
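
One way to picture how the three input modalities converge is to reduce every user turn to a single text query before retrieval. The sketch below assumes that design; `UserTurn` and `to_query` are hypothetical names, and the `transcribe` and `describe` callables stand in for the speech recognizer and vision model, which are not invoked here.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class UserTurn:
    """A single user message; any combination of fields may be set."""
    text: Optional[str] = None
    audio_path: Optional[str] = None
    image_path: Optional[str] = None


def to_query(
    turn: UserTurn,
    transcribe: Callable[[str], str],
    describe: Callable[[str], str],
) -> str:
    """Merge all provided modalities into one text query."""
    parts = []
    if turn.text:
        parts.append(turn.text.strip())
    if turn.audio_path:
        # Speech is converted to text by the injected recognizer.
        parts.append(transcribe(turn.audio_path))
    if turn.image_path:
        # Images contribute a short textual description.
        parts.append(f"(image: {describe(turn.image_path)})")
    if not parts:
        raise ValueError("turn contains no text, audio, or image")
    return " ".join(parts)
```

Injecting the recognizer and vision model as plain callables keeps the routing logic testable without loading any model.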

Deployment & Integration

- Google Cloud Run for scalable hosting

- LINE Bot API for instant messaging

- Gradio for web-based multimodal interface
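
For the LINE Bot integration, a webhook endpoint normally has to confirm that each request really comes from LINE. The LINE Messaging API does this with an HMAC-SHA256 digest of the request body, keyed by the channel secret and sent Base64-encoded in the `x-line-signature` header. The sketch below shows only that check; the function name is an assumption, and how the project's own webhook is structured is not described here.

```python
import base64
import hashlib
import hmac


def is_valid_line_signature(channel_secret: str, body: bytes, signature: str) -> bool:
    """Check a webhook body against the signature header sent with it."""
    digest = hmac.new(channel_secret.encode("utf-8"), body, hashlib.sha256).digest()
    expected = base64.b64encode(digest)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, signature.encode("utf-8"))
```

The check must run on the raw request bytes, before any JSON parsing or re-serialization, because even a whitespace change alters the digest.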

My Role & Responsibilities

Lead Research Assistant for Subproject III

As the lead research assistant, I coordinate the research team and serve as the primary technical contact with partner organizations. My responsibilities include designing and developing the multimodal dialogue system, writing code for system implementation, conducting needs assessments with NPO partners, authoring project reports, and overseeing the deployment process from development to field testing.

Current Achievements & Implementations

LINE Bot Partnerships

Deployed AI-powered LINE bots across 7 partner organizations

Total Responses

Cumulative responses served since deployment in June 2024

Multimodal System

Gradio-based system supporting text, voice, and image inputs with multilingual capabilities

Partner Organizations

We are collaborating with leading social welfare organizations to deploy our AI-powered communication systems:

中華民國腦性麻痺協會

The Cerebral Palsy Association of R.O.C.

漸凍人協會

Taiwan Motor Neuron Disease Association

陽光社會福利基金會

Sunshine Social Welfare Foundation

台北市基督教勵友中心

Good Friend Mission

行無礙資源推廣協會

Taiwan Access for All Association

桃園市北區輔具資源中心

Taoyuan North District Assistive Technology Center

連江縣早期療育資源中心

Matsu Early Intervention Resource Center

Gradio Multimodal Interface

Web-based Multimodal Dialogue Platform

Our Gradio-based web interface provides a comprehensive multimodal communication platform, supporting text input, voice recording, and image uploads with real-time multilingual responses. The interface is designed for accessibility and cross-device compatibility.

Multimodal Input Support

Seamlessly integrates text typing, voice recording, and image upload capabilities in a single unified interface.

Cross-lingual Processing

Real-time language detection and response generation in Chinese, English, Taiwanese, and Vietnamese.

Accessibility Focused

Designed specifically for users with speech impairments.

Try the Multimodal Interface

Experience our advanced multimodal dialogue system through the web interface:

Access Gradio Interface (will be available soon)

System Implementations

LINE Bot Deployment

Multi-organization chatbot system with RAG-enhanced knowledge base, supporting text and voice interactions for immediate assistance.

Gradio Multimodal Interface

Web-based platform integrating text, speech, and image input capabilities with cross-lingual support for Chinese, English, Taiwanese, and Vietnamese.

Expected Impact & Outcomes

Research Contribution

Advancing the field of inclusive AI and multimodal communication systems

Social Impact

Improving quality of life for individuals with speech impairments

Technological Innovation

Pioneering new approaches in AI-assisted communication

Commercial Potential

Creating market-ready assistive communication technologies

Related Publications & Presentations

TWSC2 2025 Conference

Implementing an Inclusive Communication System with RAG-enhanced Multilingual and Multimodal Dialogue Capabilities

Cheng-Yun Wu, Bor-Jen Chen, Wen-Hsin Hsiao, Hsin-Ting Lu, Yue-Shan Chang, Chen-Yu Chiang, Chao-Yin Lin, Yu-An Lin and Min-Yuh Day

Project Social Media & News Coverage

Media Coverage & Reports

Our project has been featured in various media outlets, highlighting the impact of AI-assisted communication technology:

Yahoo News Taiwan

Using AI, National Taipei University strives to remove communication barriers

Charming SciTech

Opening the Door of Silence: How AI is Transforming the Future of Communication for the Speech-Impaired

Voice of National Chengchi University Radio Station

NSTC Launches 'Inclusive Technology' Initiative Focused on Digital Equality for Disadvantaged Groups

Business Next

Six people can no longer speak! 20 faculty and students at National Taipei University 'work hard on a non-profit project' to help AI speak for the speech-impaired

YouTube Introduction

[ReVoice Project Team] Subproject III — Multimodal Cross-lingual Task-Oriented Dialogue System for Inclusive Communication Support

Yahoo News Taiwan

AI Gives Voice Back to the Speech-Impaired: Professor and Disabled PhD Student Develop Real-Time Translation Software

Media Impact

Our project has garnered significant media attention, helping to raise awareness about AI-assisted communication technology and its potential to improve the lives of individuals with speech impairments. This coverage has also facilitated connections with additional partner organizations and stakeholders in the assistive technology community.